Overview

Professional and continuing education units (PCEs) spend scarce resources marketing noncredit certificate programs and converting inquiries into registrations as quickly as possible. Some PCEs retain marketing consultants to augment their in-house marketing and registration capabilities, which can increase both registrations and costs. Before retaining such a consultant, it is worthwhile for PCEs to analyze their existing registration data in order to better understand: 1) how quickly students register after they inquire; 2) whether students who register quickly are motivated by different factors than students who take longer to register; and 3) whether shorter registration lag times result in increased revenue. We asked these questions of University of Delaware noncredit registration data, and we describe our findings in this paper. Based on these findings, we argue that routine and sustained analysis of the registration data hiding in plain sight in PCE databases can help PCEs make better, data-informed decisions about not just marketing and registration but also the optimal use of marketing consultants. The era of big data is here, and PCEs need to analyze their marketing and registration data in order to better describe their existing processes to key stakeholders on campus; prescribe improvements to those processes for the benefit of students, instructors and staff; and, ideally, predict registrant behavior. All of these improve the allocation of scarce marketing and registration resources.

In our study, we analyzed the database of registrants in the University of Delaware’s noncredit certificate programs between 2009 and 2015, which comprised 1,935 students. Specifically, we studied: 1) the median registration lag time by certificate (with lag time defined as the interval in days between a prospective student’s inquiry and registration); 2) whether students registered for the certificate about which they first inquired or switched to a different certificate; 3) the inquiry channel (call, survey, web, chat, etc.) preferred by our certificate registrants and non-registrants; 4) whether shorter registrant lag time correlates with increased revenue; and 5) the certificates with the highest ratio of registrants to non-registrants, i.e., the highest likelihood of converting an inquiry into a registration. Finally, we sought to understand why our short lag time students chose to register and whether their reasons differed from those of the overall registrant population.

We found the following:

- For 70% (14 of 20) of our certificates, median lag times fell between 55 and 61 days, with a median of 58 days across those 14 certificates, or just under two months of student decision-making time.

- There was little difference between the certificate program a student was interested in at the time of the initial inquiry and the certificate for which they registered, indicating that registrants knew which certificate they wanted before contacting us; our registration team did not need to sell them on a given certificate.

- There was a modest correlation between shorter lag times and increased revenue: short lag time registrants tended to favor more expensive certificates and to pay full price, in part because they missed early-bird registration discounts.

- Our comparison of registrant and non-registrant data indicates that two of our certificate programs (Social Media Marketing Strategy and Statistical Analyst) had higher inquiry-to-registration conversion rates than our other certificates. Converting an inquiry into a registration in these two certificates therefore requires less customer service labor than in other certificates.

- Most intriguingly, we learned that registrants with lag times of less than 30 days were motivated to register by different factors than the general registrant population. For these short lag time registrants, the University of Delaware’s brand value, class size, and financial aid were more important than they were for the general population of registrants.

We believe that our research methods are replicable by other PCEs. A cross-functional team of registration and marketing staff could use our research questions and methods to analyze their registrant database to better understand their students’ motivations to inquire and register for continuing education programs. Toward this end, we recommend that PCEs implement the following:

Recommendation 1: Create business processes that routinely prompt staff to extract registration data and analyze it over time. This could be the job of a cross-functional team composed of registration, marketing, and program management professionals that meets as part of the normal program close-out process at the end of each semester or term. This team could be given the responsibility of using its findings to populate a new registration management dashboard that senior PCE management can use in making resource allocation decisions. To make such an effort successful, the team members need to be curious about the story the registration data can tell them.

Recommendation 2: Leverage PCE staff’s business acumen and tacit knowledge of the noncredit market to, first, make sense of the extracted data, and second, develop creative marketing, customer service and program management uses for the data. The data by itself will not tell a PCE manager how to better market programs and register students. It takes analysis of the data by experienced PCE professionals to make a case for or against a given change in marketing and registration functions. It is this combination of empirical data and the managers’ ability to make sense of it that leads to sound management decisions.

Routine registrant data analysis requires: 1) staff with a basic knowledge of statistical analysis (graduate students from a business school or statistics program make great data analysis interns); 2) management’s willingness to support data analysis as a routine, recognized, and sustained part of the registration or marketing functions in a PCE, since episodic use of data is insufficient; 3) an organizational culture open to changing marketing and registration processes based on findings; and, most importantly, 4) a recognition that “you are what you measure,” that is, that it is crucial to select performance dashboard metrics (inquiry-to-conversion time, variance between initial and final interest area, etc.) that adequately reflect the PCE’s organizational and market context. Finally, data tells only part of an organization’s story to internal and external stakeholders, so complementing data analysis with PCE staff’s experiential knowledge will make for stronger, actionable analysis.

Descriptive Statistics

There were 1,935 students registered in the University of Delaware Division of Professional and Continuing Studies’ noncredit certificate programs between 2009 and 2015. (The data was downloaded from our proprietary Access database to Excel files for ease of analysis.) We conducted a statistical analysis of this population to find relationships between variables of interest. For each certificate program, we calculated the following registration statistics: mean, median, standard deviation, minimum, maximum, and range. We used median lag times for most of our conclusions. We define lag time as the time between an inquiry about a certificate and registration for that certificate. Based on our analysis, we discovered:

- For 70% of our certificates (14 of 20), the median lag time was 61 days or less; across those 14 certificates, the median lag time was 58 days.

- Project Management, Health Care Risk Management, Six Sigma Green Belt, Business Analyst, and RN Refresher certificates had the lowest median lag times. Students made quicker decisions to register in these certificates compared to the other certificates.

- Advanced Paralegal, Advanced Project Management, Financial Planning, and PMP Exam Prep had the largest median lag times; people took longer to register for these certificates. Two of these certificates have since been discontinued due to insufficient demand, which suggests that a long lag time may signal weak market demand.
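Our analysis was done in Excel, but the per-certificate statistics described above are simple to reproduce in code. The sketch below is a minimal illustration, not our actual workflow: it assumes each registrant record carries a certificate name, an inquiry date, and a registration date, and the sample records and the function name `lag_stats_by_certificate` are hypothetical.

```python
from collections import defaultdict
from datetime import date
import statistics

# Hypothetical sample rows: (certificate, inquiry date, registration date).
# The field layout is an assumption, not the structure of our Access database.
records = [
    ("Paralegal Certificate", date(2014, 1, 6), date(2014, 3, 5)),
    ("Paralegal Certificate", date(2014, 2, 3), date(2014, 3, 28)),
    ("Paralegal Certificate", date(2014, 5, 12), date(2014, 8, 1)),
    ("PMP Exam Prep", date(2014, 1, 10), date(2014, 6, 2)),
    ("PMP Exam Prep", date(2014, 3, 17), date(2014, 6, 20)),
]

def lag_stats_by_certificate(rows):
    """Return mean, median, stdev, min, max and range of lag time (days) per certificate."""
    lags = defaultdict(list)
    for certificate, inquired, registered in rows:
        # Lag time: whole days between inquiry and registration.
        lags[certificate].append((registered - inquired).days)
    summary = {}
    for certificate, days in lags.items():
        summary[certificate] = {
            "n": len(days),
            "mean": statistics.mean(days),
            "median": statistics.median(days),
            # Sample standard deviation needs at least two observations.
            "stdev": statistics.stdev(days) if len(days) > 1 else 0.0,
            "min": min(days),
            "max": max(days),
            "range": max(days) - min(days),
        }
    return summary

for cert, stats in lag_stats_by_certificate(records).items():
    print(cert, "median:", stats["median"], "range:", stats["range"])
```

The median is reported alongside the mean because a few very slow deciders can pull the mean far above what a typical registrant experiences.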

Table 1: Median Lag time by Noncredit Certificate Program, 2009-2015

| Noncredit Certificate Program | Median lag time (days) |
|---|---|
| Health Care Risk Management and Patient Safety | 55 |
| Six Sigma Green Belt Certificate | 55 |
| Business Analyst Certificate | 57 |
| Project Management Certificate Dover, Delaware | 57 |
| Lean Six Sigma Green Belt Certificate | 58 |
| Analytics: Optimizing Big Data Certificate | 58 |
| Paralegal Certificate | 58 |
| RN Refresher Clinical | 58 |
| RN Refresher Course | 58 |
| Project Management Certificate – Section 1 (Wilmington) | 59 |
| Project Management Bootcamp at the Beach (Lewes) | 60 |
| Social Media Marketing Strategy Certificate | 60 |
| Clinical Trials Management Certificate | 61 |
| Project Management Certificate Section 2 (Wilmington) | 61 |
| Strategic Human Capital Management Certificate | 69 |
| Finance for Non-Financial Managers Certificate | 70 |
| Advanced Project Management Certificate | 97 |
| Certificate in Financial Planning | 118 |
| PMP Exam Prep | 124 |
| Advanced Paralegal Certificate | 169 |
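The 70% share and the 58-day figure can be rechecked directly from the medians in Table 1. The sketch below assumes a 61-day cutoff, which is our reading of where the 14 fastest certificates end rather than a threshold defined in the study; it counts the certificates at or below that cutoff and takes the median of their medians.

```python
import statistics

# Median lag times (days) transcribed from Table 1.
table1 = {
    "Health Care Risk Management and Patient Safety": 55,
    "Six Sigma Green Belt Certificate": 55,
    "Business Analyst Certificate": 57,
    "Project Management Certificate Dover, Delaware": 57,
    "Lean Six Sigma Green Belt Certificate": 58,
    "Analytics: Optimizing Big Data Certificate": 58,
    "Paralegal Certificate": 58,
    "RN Refresher Clinical": 58,
    "RN Refresher Course": 58,
    "Project Management Certificate – Section 1 (Wilmington)": 59,
    "Project Management Bootcamp at the Beach (Lewes)": 60,
    "Social Media Marketing Strategy Certificate": 60,
    "Clinical Trials Management Certificate": 61,
    "Project Management Certificate Section 2 (Wilmington)": 61,
    "Strategic Human Capital Management Certificate": 69,
    "Finance for Non-Financial Managers Certificate": 70,
    "Advanced Project Management Certificate": 97,
    "Certificate in Financial Planning": 118,
    "PMP Exam Prep": 124,
    "Advanced Paralegal Certificate": 169,
}

THRESHOLD_DAYS = 61  # assumed cutoff, roughly two months

within = sorted(v for v in table1.values() if v <= THRESHOLD_DAYS)
share = len(within) / len(table1)
print(f"{len(within)} of {len(table1)} certificates ({share:.0%}) have a median lag <= {THRESHOLD_DAYS} days")
print("Median of those medians:", statistics.median(within))
```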

Inquiry Interest vs. Final Registration

We also wanted to know whether, and how, registrations matched or mismatched the corresponding inquiries. We found:

- Of the 1,935 registrants, 94% registered for the certificate about which they initially inquired.

- 6% registered for different certificates.

This finding allows us to conclude that the large majority of our noncredit students knew which certificate they wanted to take before they contacted us. This means that our customer service team does not need to sell a menu of certificate choices to potential students. Rather, they can focus on helping students register for the certificate program about which they inquire.
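The 94% figure is the share of registrants whose registered certificate equals the certificate named in their first inquiry. A minimal sketch of that calculation follows; the sample rows and the function name `match_rate` are hypothetical.

```python
# Hypothetical registrant rows: (certificate first inquired about, certificate registered for).
registrants = [
    ("Paralegal Certificate", "Paralegal Certificate"),
    ("Business Analyst Certificate", "Business Analyst Certificate"),
    ("Business Analyst Certificate", "Analytics: Optimizing Big Data Certificate"),
    ("RN Refresher Certificate", "RN Refresher Certificate"),
]

def match_rate(rows):
    """Share of registrants who registered for the certificate they first inquired about."""
    matches = sum(1 for inquired, registered in rows if inquired == registered)
    return matches / len(rows)

rate = match_rate(registrants)
print(f"match: {rate:.2%}, mismatch: {1 - rate:.2%}")  # match: 75.00%, mismatch: 25.00%
```

Because this per-registrant definition cannot exceed 100%, the per-certificate figures above 100% in Table 2 must rest on a different denominator, which the table does not specify; this sketch does not reproduce that calculation.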

Table 2 shows the percentage of matches and mismatches by certificate over the study period. We included only certificates offered as of Fall 2015, omitting certificates that are no longer offered. The match percentage represents students who registered for the certificate about which they initially inquired. The mismatch percentage represents students who inquired about a certificate but registered for another one. Certificates with a match percentage above 100% (and thus a negative mismatch percentage) picked up registrants who did not initially inquire about them.

Table 2: Inquiry vs. Registration by Certificate – Match/Mismatch

| Certificate Registered by Student | % Matches | % Mismatches |
|---|---|---|
| Health Care Risk Management Certificate | 81.03% | 18.97% |
| Social Media Marketing Certificate | 100.00% | 0.00% |
| Analytics: Optimizing Big Data Certificate | 104.84% | -4.84% |
| Clinical Trials Management Certificate | 91.89% | 8.11% |
| Business Analyst Certificate | 63.74% | 36.26% |
| Lean Six Sigma Green Belt Certificate | 96.18% | 3.82% |
| Paralegal Certificate | 89.78% | 10.22% |
| Project Management Certificate (all locations) | 101.09% | -1.09% |
| RN Refresher Certificate | 99.13% | 0.87% |
| Total | 94.37% | 5.63% |

Inquiry Sources of Registrants

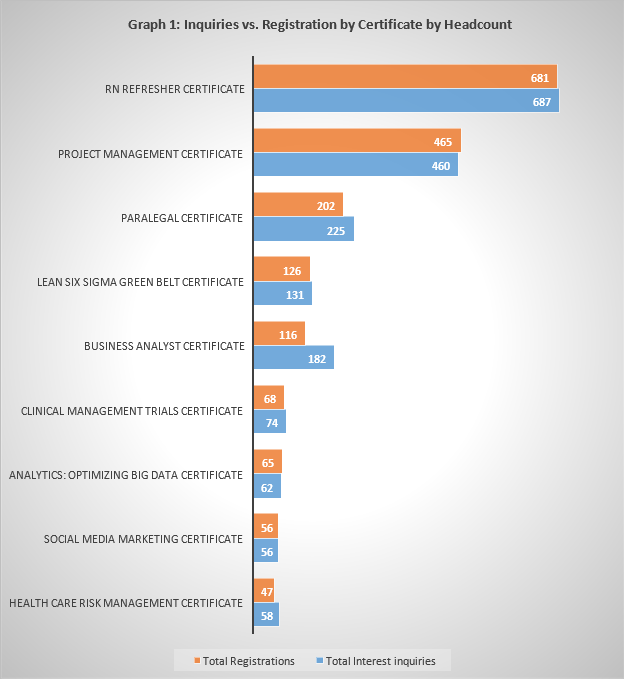

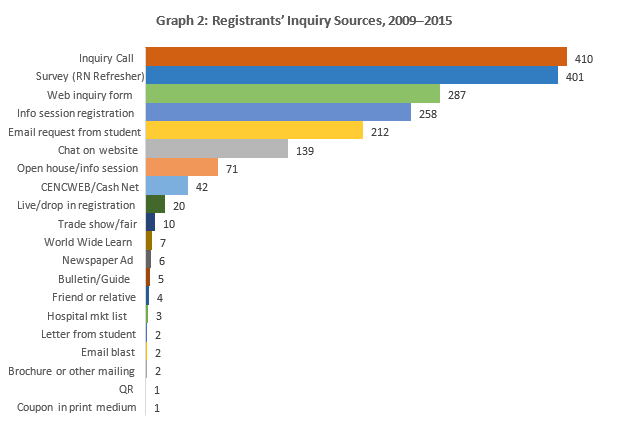

We also wanted to learn how registrants preferred to contact us, that is, the source of their inquiry. To do so, we evaluated the number and type of inquiries for the registrant population in the 2009–2015 timeframe. The inquiry channels that produced the most conversions of inquirers into registered students were calls to our registration office, the website chat function, email requests to our office, information sessions about our certificates, our website, and surveys that we sent to potential students.

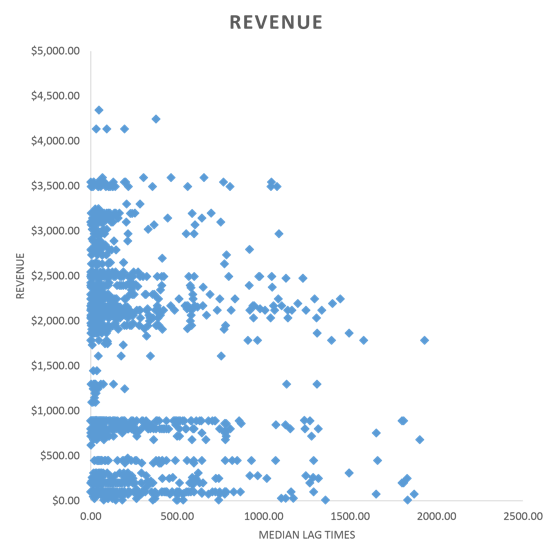
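A channel tally like the one above amounts to counting inquiries and conversions per channel. The sketch below is a minimal illustration on a hypothetical inquiry log; the channel labels and the function name `conversions_by_channel` are assumptions, not fields from our database.

```python
from collections import Counter

# Hypothetical inquiry log: (inquiry channel, converted to a registration?).
inquiries = [
    ("call", True), ("call", True), ("call", False),
    ("chat", True), ("chat", False),
    ("email", True),
    ("survey", False), ("survey", False), ("survey", True),
    ("web form", False),
]

def conversions_by_channel(rows):
    """Return (channel, conversions, inquiries, rate) tuples, most conversions first."""
    total = Counter(channel for channel, _ in rows)
    converted = Counter(channel for channel, registered in rows if registered)
    # sorted() is stable, so channels with equal conversions keep their first-seen order.
    return sorted(
        ((ch, converted[ch], total[ch], converted[ch] / total[ch]) for ch in total),
        key=lambda row: row[1],
        reverse=True,
    )

for channel, conv, tot, rate in conversions_by_channel(inquiries):
    print(f"{channel:9} {conv}/{tot} converted ({rate:.0%})")
```

Reporting both the count and the rate matters: a channel can produce many conversions simply because it carries most of the inquiry volume.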

Lag time vs. Cost of Certificate

We wanted to know whether registrant lag times had anything to do with the cost of the certificate for which they registered, that is, whether the cost of a certificate influences how long students take to register. We found a moderate relationship: individuals who registered quickly after inquiring (less than 30 days) registered for costlier certificates than those who took longer to decide, and people who chose less expensive offerings took the most time to decide. This finding is counterintuitive; one possible contributing factor is whether the registrant’s employer is fully or partially paying for the certificate program. Our data did not allow us to assess any correlation between short lag times and partial or full employer-paid status, though a PCE with such data could run this analysis. We know that roughly 25% of our certificate program registrants receive some support from their employer. This suggests that some short lag time registrants may register quickly because their employer pays for part of the certificate, but we do not yet have the data to support this hypothesis.

Another factor affects short lag time registrants: those who register just before a certificate starts usually pay full price rather than a discounted price because they miss the early-bird registration discount that we typically offer.
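One way to quantify the lag-versus-cost relationship is a correlation coefficient plus a simple comparison of average price paid on either side of the 30-day mark. The sketch below is illustrative only: the registrant pairs are hypothetical, and the hand-written `pearson` helper is ours, not part of the study.

```python
import math
import statistics

# Hypothetical registrants: (lag time in days, price paid in dollars).
registrants = [(12, 2995), (20, 2795), (25, 2495), (45, 1995), (60, 1795), (95, 1495)]

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

lags = [lag for lag, _ in registrants]
prices = [price for _, price in registrants]
r = pearson(lags, prices)  # negative here: shorter lags go with higher prices in this sample

# Average price paid by short (< 30 days) vs. longer lag registrants.
short = [p for lag, p in registrants if lag < 30]
longer = [p for lag, p in registrants if lag >= 30]
print(f"r = {r:.2f}")
print(f"mean price, lag < 30 days: {statistics.mean(short):.0f}; lag >= 30 days: {statistics.mean(longer):.0f}")
```

On Python 3.10+, `statistics.correlation` computes the same Pearson coefficient, and from Python 3.12 its `method="ranked"` option gives a Spearman rank correlation that is less sensitive to a few very long lags. Because employer payment may drive both price and speed, a correlation here would not by itself show that price causes faster registration.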

Graph 3: Revenue vs. Registration Lag Time, 2009–2015

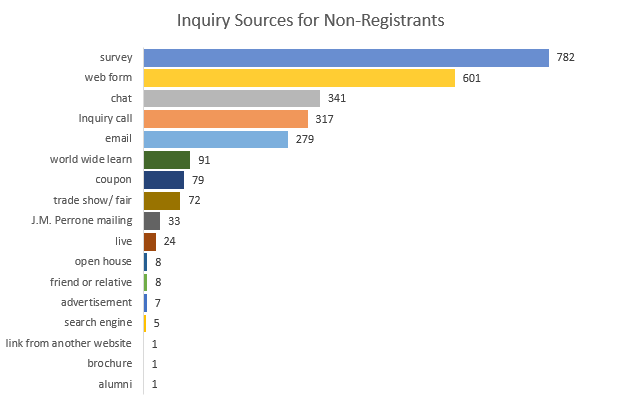

Report for Non-registrants in Certificate Programs, 2009 – 2015

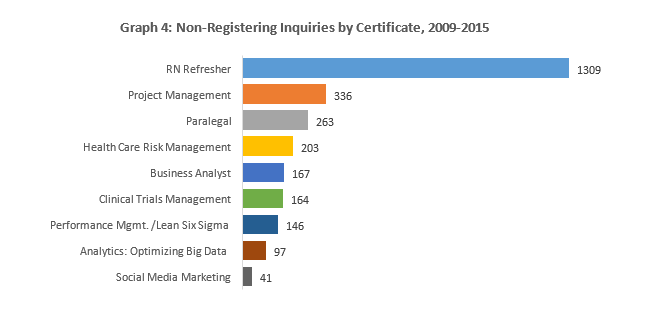

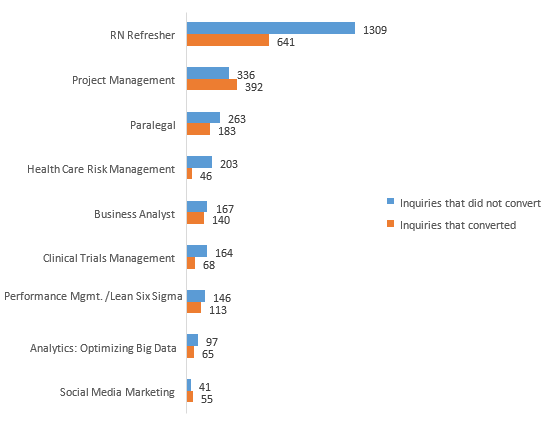

We also analyzed our database of non-registrants from 2009 through 2015 to learn how these inquirers differed from registrants. The data set contains 2,685 inquiries from people who asked about certificates but did not register. We evaluated, by certificate, all inquiries that did not convert into registrations, along with their inquiry source, and compared the results with inquiries that did convert. Certificates such as RN Refresher, Project Management, Paralegal, Health Care Risk Management, and Business Analyst had large numbers of inquirers who did not register. Big Data Analyst and Social Media Marketing had comparatively few inquirers who did not register, which may be due in part to these two programs’ recent launch dates.

These findings can help our customer service team identify the inquiries that have a higher chance of converting to a registration. In turn, this understanding can help them allocate their time to maximize conversions. We would, of course, take the same professional approach with each prospective student, but historical conversion data can help a customer service team member decide how long to spend on the phone with an inquiring student.

The inquiry source for non-registrants did not differ much from the inquiry source for registrants. A large number of inquiries from non-registrants came through surveys, web forms, email requests, inquiry calls, and chats on the website.

Registration Conversion vs Non-Conversion by Interest Area

Next, we compared the percentage of inquiries between 2009 and 2015 that converted with the percentage that did not. Of the 4,429 inquiries in this period, 38% converted and 62% did not, for an overall conversion rate of 38%. We do not know how this rate compares with that of other PCEs, as no benchmark data exists against which we can compare it.
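The 38% rate follows directly from the totals in Table 3. The sketch below recomputes it from the table’s per-certificate counts and ranks the certificates by conversion rate; the numbers are transcribed from Table 3, and only the code itself is ours.

```python
# (total inquiries, inquiries that converted) per certificate, transcribed from Table 3.
table3 = {
    "RN Refresher": (1950, 641),
    "Project Management": (728, 392),
    "Paralegal": (446, 183),
    "Health Care Risk Management": (249, 46),
    "Business Analyst": (307, 140),
    "Clinical Trials Management": (232, 68),
    "Performance Management/Lean Six Sigma": (259, 113),
    "Analytics: Optimizing Big Data": (162, 65),
    "Social Media Marketing": (96, 55),
}

total = sum(t for t, _ in table3.values())
converted = sum(c for _, c in table3.values())
print(f"overall: {converted}/{total} = {converted / total:.2%}")  # overall: 1703/4429 = 38.45%

# Rank certificates from highest to lowest conversion rate.
ranked = sorted(table3.items(), key=lambda kv: kv[1][1] / kv[1][0], reverse=True)
for name, (inquiries, conv) in ranked:
    print(f"{name:40} {conv / inquiries:.2%}")
```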

Table 3: Registration Conversion vs. Non-Conversion, 2009–2015

| Interest Area/Certificate | Total Inquiries | Inquiries that did not convert | % of Total | Inquiries that converted | % of Total |
|---|---|---|---|---|---|
| RN Refresher | 1950 | 1309 | 67.13% | 641 | 32.87% |
| Project Management | 728 | 336 | 46.15% | 392 | 53.85% |
| Paralegal | 446 | 263 | 58.97% | 183 | 41.03% |
| Health Care Risk Management | 249 | 203 | 81.53% | 46 | 18.47% |
| Business Analyst | 307 | 167 | 54.40% | 140 | 45.60% |
| Clinical Trials Management | 232 | 164 | 70.69% | 68 | 29.31% |
| Performance Management/Lean Six Sigma | 259 | 146 | 56.37% | 113 | 43.63% |
| Analytics: Optimizing Big Data | 162 | 97 | 59.88% | 65 | 40.12% |
| Social Media Marketing | 96 | 41 | 42.71% | 55 | 57.29% |
| Total | 4429 | 2726 | 61.55% | 1703 | 38.45% |

The data provides a useful aggregate picture of conversion rates by certificate. The underlying data also shows changes by year, though the graph presents only the aggregate figures. This data can help our customer service team and certificate program coordinators better understand how many inquiries it typically takes to fill a class. The next step is to understand why prospective students chose not to register and to use this knowledge to improve our programs or our customer service processes.
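Turning a conversion rate into “inquiries needed to fill a class” is a single division, rounded up. The sketch below uses the Table 3 counts for three rows and assumes a hypothetical class size of 20 seats; actual class sizes vary by certificate.

```python
# (inquiries that converted, total inquiries), transcribed from Table 3.
rates = {
    "All certificates": (1703, 4429),
    "Social Media Marketing": (55, 96),
    "RN Refresher": (641, 1950),
}

SEATS = 20  # hypothetical class size, for illustration only

def inquiries_to_fill(seats, converted, inquiries):
    """Inquiries needed to fill `seats` at the historical conversion rate, rounded up."""
    # Integer ceiling division: seats / (converted / inquiries), rounded up.
    return (seats * inquiries + converted - 1) // converted

for name, (conv, inq) in rates.items():
    print(f"{name:24} ~{inquiries_to_fill(SEATS, conv, inq)} inquiries for {SEATS} seats")
```

At these historical rates, a 20-seat Social Media Marketing class would need about 35 inquiries, while an RN Refresher class of the same size would need about 61.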

Graph 5: Registration Conversion vs. Non-Conversion by Certificate, 2009–2015

Understanding Student Motivations to Register: Qualitative Analysis

To better understand the registration motivations of short and long lag time students, we compared the pre-program survey results for both student types. We used data from the Spring 2015 student cohort for this purpose. The survey asked:

- the student’s reason for enrollment

- what influenced the student to attend the University of Delaware

For comparison purposes, we grouped survey respondents into three categories:

- respondents from the 2015 Spring Semester Step 1 Survey (pre-program) who registered 30 days or less after inquiry (the short lag time students)

- all respondents from 2015 Spring Semester Step 1 Survey

- all respondents from 2011 Fall Semester through 2015 Spring Semester Step 1 Survey

Table 4: Students’ Reasons for Enrollment in Certificate Programs

| Reason for enrollment (certificates offered in Spring 2015) | Spring 2015, lag time ≤ 30 days | Fall 2011–Spring 2015 average | Spring 2015, all respondents |
|---|---|---|---|
| Change careers | 48.00% | 31.03% | 35.71% |
| Learn new skills to enhance my job performance | 80.00% | 65.38% | 69.05% |
| Advance my career | 72.00% | 71.95% | 71.43% |
| Management sent me | 4.00% | 2.94% | 7.14% |
| Re-enter the workforce | 12.00% | 11.43% | 11.90% |
| Earn CEUs, CPEs or other noncredit units | 12.00% | 15.21% | 9.52% |

Findings

- People who registered within a month of inquiry (the short lag time students) more often wanted to change careers and to learn new skills to enhance job performance. These are the two areas in which they stood out from the other two groups.

- For enrollment based on advancing one’s career, being sent by management, reentering the workforce, or earning noncredit units, all groups had similar responses for the reason for enrollment.
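The “stood out” judgment can be made explicit by measuring how far the short lag time group leads the closer of the two comparison groups on each reason. The sketch below uses the Table 4 percentages; the 10-point threshold and the function name `standout_reasons` are hypothetical choices for illustration.

```python
# Table 4 percentages: (lag <= 30 days, Fall 2011–Spring 2015 average, all Spring 2015 respondents).
table4 = {
    "Change careers": (48.00, 31.03, 35.71),
    "Learn new skills to enhance my job performance": (80.00, 65.38, 69.05),
    "Advance my career": (72.00, 71.95, 71.43),
    "Management sent me": (4.00, 2.94, 7.14),
    "Re-enter the workforce": (12.00, 11.43, 11.90),
    "Earn CEUs, CPEs or other noncredit units": (12.00, 15.21, 9.52),
}

def standout_reasons(table, min_gap=10.0):
    """Reasons where the short lag group exceeds BOTH comparison groups by at least min_gap points."""
    result = {}
    for reason, (short, avg_all_terms, all_2015s) in table.items():
        gap = short - max(avg_all_terms, all_2015s)  # lead over the closer comparison group
        if gap >= min_gap:
            result[reason] = round(gap, 2)
    return result

print(standout_reasons(table4))
```

With these figures, only “Change careers” (a lead of about 12.3 points) and “Learn new skills to enhance my job performance” (about 11.0 points) clear the threshold; “Advance my career” differs by less than a tenth of a point.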

The first finding presents an intriguing line of further inquiry. If our marketing message emphasized learning new skills and changing careers more than other outcomes, would more of our students register earlier? Would the number of short lag time registrants increase? This is worth considering and possibly testing in future marketing campaigns.

We used the same comparative statistics to understand differences between what influenced short and long lag time students to attend our certificate programs.

Table 5: Influence to Attend University of Delaware for Certificate Programs

| Influence to attend UD (certificates offered in Spring 2015) | Spring 2015, lag time ≤ 30 days | Fall 2011–Spring 2015 average | Spring 2015, all respondents |
|---|---|---|---|
| Cost | 68.00% | 41.10% | 69.05% |
| Location | 64.00% | 60.70% | 61.90% |
| Class Size | 48.00% | 9.19% | 26.19% |
| Financial aid | 36.00% | 12.26% | 20.24% |
| Program offered | 56.00% | 81.84% | 35.71% |
| UD’s Brand Value | 72.00% | 47.79% | 54.76% |

Findings

- People who registered within a month of inquiry (the short lag time students) strongly considered class size, financial aid and UD’s brand value as reasons to attend UD.

- The analysis also shows that the short lag time students were less influenced by the program offered (56%) than the Fall 2011–Spring 2015 average respondents (81.84%), though more than all Spring 2015 respondents (35.71%).

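- For location, all groups were on par with each other. For cost, the short lag time students (68%) and all Spring 2015 respondents (69.05%) were on par, both well above the Fall 2011–Spring 2015 average (41.10%).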

The greater influence of financial aid among the short lag time students may indicate a correlation between short lag time registration and the availability of financial aid. If we offered more financial aid, would students register more quickly, thus decreasing lag time? Another question worth considering is whether time-bound discounts with an impact similar to that of financial aid, but with less paperwork, could prompt students to register earlier and thereby also decrease lag time. The other two influences cited by short lag time registrants, UD’s brand value and smaller class size, could be included in future marketing messages, with the goal of prompting prospects to consider our certificates and to register for them quickly.
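If a PCE piloted a time-bound discount, one simple way to judge the result would be to compare the share of inquirers who register within 30 days with and without the offer. The sketch below uses a standard two-sided, two-proportion z-test with a pooled standard error; the pilot counts are hypothetical, and the test assumes inquirers were assigned to the two groups at random and that each group is large enough for the normal approximation to hold.

```python
import math

def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two proportions (pooled standard error)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution: P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical pilot: inquirers offered a time-bound discount vs. inquirers who were not,
# counting how many in each group registered within 30 days of inquiring.
z, p = two_proportion_z_test(x1=45, n1=150, x2=30, n2=150)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

In this made-up example, 30% vs. 20% short lag registration gives z of about 2.0 and p of about 0.046; a real pilot would also need to account for the revenue given up through the discount.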

Conclusion

Routine analysis of PCE registration data can yield intriguing marketing and registration findings that can decrease registration lag times, increase the inquiry-to-registration conversion rate, and decrease registration labor time. These outcomes have the potential to increase a PCE’s profitability and to free up labor for other purposes (for example, developing more certificate programs). As described in this paper, PCEs can use our basic research questions to analyze their own registration databases and find the data that is hiding in plain sight. Believe it or not, finding meaningful patterns and relationships in hidden registration data can be a fun, creative task. When analyzed with sustained curiosity and creativity, registration data can help a PCE unit educate its students more effectively, allocate scarce resources more economically, and please its campus and community partners more consistently.

George Irvine currently serves as Director of Corporate Programs and Partnerships in the University of Delaware’s Alfred Lerner College of Business and Economics. Prior to this position, he served as Assistant Director of Organizational Learning Solutions in the University of Delaware’s Division of Professional and Continuing Studies.

Suyash Gautam is a Business Analysis Consultant for JP Morgan Chase. In 2015, he earned his Master of Science (M.S.) in Information Systems and Technology Management from the UD Lerner College of Business and Economics. In 2014–15, he served as a data analysis intern in the Division of Professional and Continuing Studies.